Zeitgeist coffeehouse, corner of Jackson Street and Second Ave. S., Pioneer Square, Seattle, Washington. Photo by Joe Mabel.

Early last month I ran across a post called “Beware the zeitgeister” by Seth Godin, the senior member of the Godin/Shirky/Joel axis of totally bald-headed media/marketing gurus. Godin’s characteristically delphic utterance (commendably shorter than the bloated post you’re reading now) warned against personalities who care only “about what’s trending now,” and who will “interrupt a long-term strategy discussion to talk urgently about today’s micro-trend instead.” And he closes with this:

The challenge, of course, is that the momentary zeitgeist always changes. That’s why it’s so appealing to those that surf it, because by the time it’s clear that you were wrong, it’s changed and now you can talk about the new thing instead.

However much I sympathize with the post’s core idea, I must confess that Godin hung me up a bit with the term “momentary zeitgeist.” Is such a thing even possible? I wondered. Then, almost in real time, I ran across another instance of the z-word in a post by Catherine Taylor, the witty social-media gadfly of MediaPost, who, in a rumination on a tweet dreamed up by Procter & Gamble to take advantage of the recent, temporary mass insanity surrounding the domicile decisions of LeBron James, noted that all P&G had done was “to appropriately piggyback on something in the zeitgeist that’s been brewing over the last few days.”

Disturbed, I ran to fetch my dog-eared copy of Scribner’s five-volume 1973 classic Dictionary of the History of Ideas, a decidedly analog relic of my nerdy young adulthood (and now, somewhat to my amazement, available complete online at a moderately difficult-to-navigate site at the University of Virginia). Not surprisingly, the entry for “Zeitgeist” — written by Nathan Rotenstreich, a professor of philosophy at Hebrew University — is the last entry in the dictionary, and I here take the liberty of quoting its first paragraph in full:

Along with the concept of Volksgeist we can trace in literature the development of the cognate notion of Zeitgeist (Geist der Zeit, Geist der Zeiten). Just as the term Volksgeist was conceived as a definition of the spirit of a nation taken in its totality across generations, so Zeitgeist came to define the characteristic spirit of a historical era taken in its totality and bearing the mark of a preponderant feature which dominated its intellectual, political, and social trends. Zeit is taken in the sense of “era,” of the French siècle. Philosophically, the concept is based on the presupposition that the time has a material meaning and is imbued with content. It is in this sense that the Latin tempus appears in such phrases as tempora mutantur. The expression “it is in the air” is latently related to the idea of Zeitgeist.

The good Dr. Rotenstreich — who also wrote the Ideas dictionary’s entry for “Volksgeist” and appears to have intended the two articles as a pair — walks readers at length through the philosophical pedigree of both terms: the connotative ties of the German Geist to the Hebrew ruah, the Greek pneuma, and the Latin spiritus; the early emergence of the concepts of a “spirit” of nations and of eras in the work of Enlightenment thinkers such as Montesquieu, Voltaire, and Kant; the solidification of the two concepts in the thought of Johann Gottfried von Herder, and their flowering in the world-historical philosophy of Hegel. The main point for now is that, as the quote above suggests, the understanding of “Zeitgeist” is that it denotes the spirit of an era or an age, and that the time itself “has a material meaning and is imbued with content.” That seems very far away from the notion of the two commentators quoted at the outset, who apparently find “zeitgeist” a convenient catchword for what’s hot at the moment — a river of ever-changing “trending” topics that one can mine for a quick reading in the way one reads the car’s oil level from the dipstick.

So when did “Zeitgeist” lose its original historical usefulness — when, if you will, did it lose its initial capital and its italics — and become a buzzword for whatever is trending on the Web at the moment? As with so many of the mixed blessings of the modern age, the temptation is to lay this one at the doorstep of Google, which, in 2001, first released Google Zeitgeist, an online tool that allowed visitors to see what users were predominantly searching for at any given time. The real-time version of Zeitgeist was replaced in 2007 by Google Trends, but Google still releases a yearly review of hot search terms under the Zeitgeist brand. It seems reasonable (at least for a toffee-nosed snob like me) to suspect that this feature may have marked the first encounter of many in the population with any form of the term “Zeitgeist.”

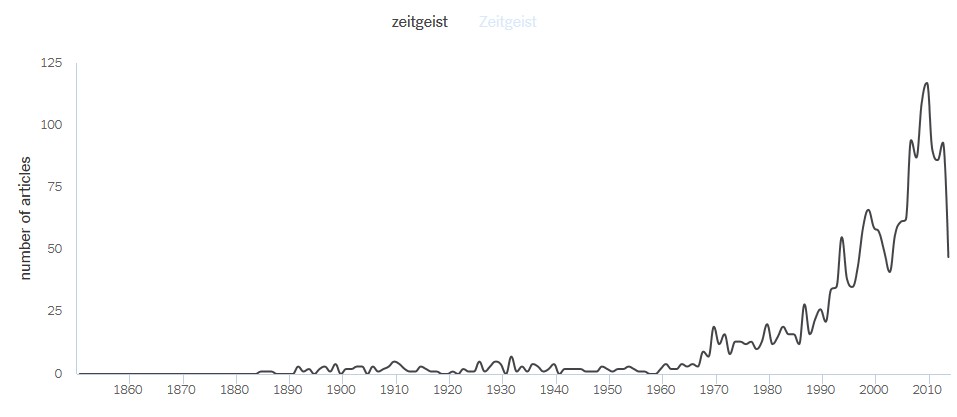

Fortunately, we can test the hypothesis with the new and sublimely time-wasting NYT Chronicle toy dreamed up by the Web wizards at The New York Times. NYT Chronicle lets you enter a word or words in a search blank, and spits out a simple line graph showing the percentage or number of Times articles in which the word has appeared, across the Paper of Record’s entire printed history since 1851. (Nota bene: With NYT Chronicle, we can finally bring some statistical rigor to the unsupported proposition of Keats that “Beauty is Truth, Truth Beauty.” The answer from NYT Chronicle is a qualified yes — Beauty is Truth, but only for a limited period from 1927 to 1965.)

I dropped “zeitgeist” into NYT Chronicle, and here is what I ended up with:

Conclusion: The use of the term did see a big jump after ~2003 that could plausibly relate to the Google product — but it’s also clear that “zeitgeist” was trending up in the pages of the Times long before then. (The rise after 1988 may also be partly due to the formation of the motion picture distributor Zeitgeist Films, the name of which appears frequently in the credits section of Times film reviews.) The apparent, precipitous decline at the end of the series simply reflects the fact that we’re only a bit more than half done with 2014.

Of course, the number of articles on the Times chart is rather small; apparently there’s only so much zeitgeist the Gray Lady can handle, even in the bumper-crop year of 2010 (117 articles). The broader sample raked through by Google News, meanwhile, finds, in the past month or so, more than 850 articles containing “zeitgeist” (including, it must be said, many duplicates). And a quick, pseudo-random trawl through a few of those comes up with some real humdingers:

Does our fashion photography look good enough? I trust Tomas Maier’s opinion on this more than almost anyone’s. And there are many a modern swan who I’m zinging emails at to make sure the mag touches that zeitgeist: Alexandra Richards, Liz Goldwyn, Kick Kennedy.—From a New York Observer interview with Jay Fielden, editor of Town & Country

[M]any of these records captured, as most truly great albums somehow do, the very essence of the time, its aura, its being, its, for lack of a better word, zeitgeist.—“Ed Kowalczyk to Celebrate 20 Years of Throwing Copper at Paramount”, Long Island Press

It is all about pastels, possums! In what is chalked up as a throwback to the 1950s, purple hair has re-entered the zeitgeist with more gusto than the twist at a sock hop.—“Hair styling hits a purple patch as kooky becomes cool”, Sydney Morning Herald

There is even — yes, you saw this coming — a recent article on Sports Illustrated’s SI.com titled “The Zeitgeist of LeBron James.”

The popularity of “zeitgeist” is easy enough to understand; it’s one of those words that make one seem just a little smarter than one actually is, and thus are catnip for insecure writers like me. And, of course, given the ceaseless deluge of screen media and the nanosecond attention spans it’s engendering, some sort of term was needed to cover the Spirit of the Instant. What a shame that the arbiters of media taste didn’t just create a new one — “InstaGeist,” perhaps, or, even better, “Augenblickgeist,” the “Spirit of the Eyeblink.”

Because what’s most troubling about the cheapening of “Zeitgeist” is not so much its extension to ever more trivial twists and turns on social media, as it is the nagging feeling that we don’t really have any use for the original sense anymore. When we think, if at all, about how future historians will catalog the current age, we have a vague sense that the rise and prevalence of technology will constitute the commanding theme. But how will the era stack up culturally — indeed, will there be a definable cultural “era” at all — and what will be the unit of time that ultimately “has a material meaning and is imbued with content”? Are we approaching asymptotically, with each passing, media-saturated day, a situation where that characteristic time equals zero — where the content is so overwhelming, and the time to which it’s attached so short, that the Augenblickgeist is the only Geist that has any relevance?

“How shall a generation know its story,” wrote Edgar Bowers in his poem “For Louis Pasteur,” “if it will know no other?” It would be ironic indeed if the rise of the “momentary zeitgeist,” so rooted in the immediate present, ultimately marked the main defining theme of our real … Zeitgeist.